The emergence of real-time interactive video streaming infrastructure as an essential alternative to the prevailing one-way streaming grid is set to usher in a new generation of ultramedia apps and services across the consumer and commercial markets.

This is a major milestone in internet evolution, one that has had an unusually long under-the-radar shelf life marked by a good deal of confusion, not to say misinformation, about whether such an infrastructure is possible or even needed. And among those who have a burning need for real-time streaming, there’s been considerable uncertainty about where to turn for solutions.

The good and potentially market-moving news is that the fog is lifting, rapidly giving way to vivid demonstrations that multiple solutions now offer a way forward. Use cases for viable real-time interactive streaming (RTIS) platforms are proliferating across the globe, and these platforms can deliver massively scalable, synchronized user engagement in high-quality interactive video applications with end-to-end latencies below 500ms and, in some cases, at or under 50ms.

A Maturing RTIS Supplier Ecosystem

As a result, there’s growing awareness that the internet can be equipped to make RTIS infrastructure the linchpin of the next chapter of life in cyberspace. Notwithstanding a cacophony of conflicting commentary about ultralow latency, it’s clear that the experts responsible for running streaming operations understand that the barriers to the transition to RTIS, wherever it’s needed, are disappearing.

This was confirmed when the readers of Streaming Media, the leading trade publication devoted to streaming technology, picked RTIS platform provider Red5’s TrueTime solution portfolio as the best live streaming service in the 2023 Streaming Media Readers’ Choice Awards.

Note, this wasn’t about naming the best real-time streaming provider; it was about identifying the best live streaming solution, period. Also noteworthy is the fact that one of the two second-place winners in the live-streaming category was Dolby.io, another leading RTIS platform provider.

In addition to underscoring the fact that RTIS is now a viable option, these choices show the priority these streaming experts have placed on finding solutions that surpass conventional Hypertext Transfer Protocol (HTTP)-based approaches to live streaming. The urgency driving that search is apparent in an outpouring of use cases emerging in media and entertainment, telemedicine, education, public safety, the military and many other segments of the connected economy.

The plain truth is, when a video-rich application calls for interactive, sub-500ms connectivity, a CDN offering one-way transport at reduced end-to-end latencies in the 10-, 5- or even 2-second range is useless. Service providers that don’t realize well-proven RTIS solutions are at hand run a real risk of being late to the party as the shift to these platforms picks up steam.

As things now stand, anyone seeking to meet RTIS market needs can choose from multiple options that have moved well beyond the prove-in stage to commercial operations engaging millions of users worldwide. While their approaches vary widely, they’ve all found ways to put the point-to-point WebRTC (Web Real-Time Communication) protocol to use at scales, quality levels and functionality beyond the scope of the standard as adopted by the Internet Engineering Task Force (IETF) and World Wide Web Consortium (W3C).

These RTIS platform providers shouldn’t be confused with services like Zoom, Google Meet and Webex that focus strictly on video conferencing, or with the communications platform-as-a-service (CPaaS) providers that offer video conferencing along with virtual PBX and other non-video communications services. The focus here is on platforms that are true bellwethers in terms of user scalability, use-case flexibility, feature enrichment, and quality of experience (QoE).

They include:

- Agora

- Amazon IVS

- ANT Media

- Cloudflare Stream

- Dolby Millicast

- LiveKit

- LiveSwitch

- nanocosmos

- Phenix Real Time Solutions

- Red5

- Vonage

- Wowza

Over time we’ll profile all of them as we report on use cases specific to each. But before looking under all these hoods, it’s useful to explore some of the market developments that have brought us to this point, since those developments determine the performance parameters users should look for as they shop for solutions.

At minimum, they should expect the following requirements to be met:

- End-to-end max latency in all directions at any distance for most use cases: <500ms.

- Simultaneous stream reception across all end points.

- Optimum latencies for the most demanding use cases: <50ms.

- Support for server-side ad insertion (SSAI), personalization and other current streaming functionalities.

- Support for features and video interactivity beyond the reach of conventional streaming.

- TV-caliber A/V quality with support for robust security (DRM, watermarking).

- Scale of live multicast audience: >1M.

- Scale of multidirectional video interactivity: >1K.
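The numeric thresholds in this list lend themselves to a simple screening check. The following Python sketch is illustrative only: the `PlatformSpec` fields and the `unmet_requirements` helper are hypothetical names, the figures for any real platform would have to come from its own documentation or testing, and the qualitative items (SSAI, DRM, interactivity features) are left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlatformSpec:
    """Hypothetical summary of a platform's measured capabilities."""
    name: str
    max_e2e_latency_ms: float          # worst-case end-to-end latency, any direction
    best_latency_ms: float             # latency achievable for the most demanding cases
    max_live_audience: int             # simultaneous one-to-many viewers
    max_interactive_participants: int  # simultaneous multidirectional video users


# Thresholds taken from the requirements list above.
MAX_E2E_MS = 500
DEMANDING_MS = 50
MIN_AUDIENCE = 1_000_000
MIN_INTERACTIVE = 1_000


def unmet_requirements(spec: PlatformSpec) -> List[str]:
    """Return the numeric requirements that the spec fails to satisfy."""
    failures = []
    if not spec.max_e2e_latency_ms < MAX_E2E_MS:
        failures.append("end-to-end latency < 500 ms")
    if not spec.best_latency_ms < DEMANDING_MS:
        failures.append("demanding-use-case latency < 50 ms")
    if not spec.max_live_audience > MIN_AUDIENCE:
        failures.append("live audience > 1M")
    if not spec.max_interactive_participants > MIN_INTERACTIVE:
        failures.append("interactive participants > 1K")
    return failures


candidate = PlatformSpec("example-platform", 400, 45, 2_000_000, 500)
print(unmet_requirements(candidate))
```

Here the made-up candidate passes on latency and audience size but is flagged for supporting only 500 interactive participants.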

The New Streaming Transport Framework

All the applications now envisioned for RTIS in response to the market trends discussed below would be pie in the sky were it not for widescale adoption of the WebRTC protocol. WebRTC itself is a stack of protocols whose media transport is built on the Real-time Transport Protocol (RTP), the same protocol that anchors IP-based voice communications; WebRTC carries it in its encrypted form, SRTP.

In contrast to conventional HTTP-based client-pulled streaming, WebRTC is a peer-to-peer push protocol that relies on the User Datagram Protocol (UDP) rather than the Transmission Control Protocol (TCP), transmitting packets with no confirmation that they’ve been delivered and no buffering while dropped packets are resent. Forward error correction (FEC) and a range of other mechanisms preserve transmission continuity without incurring the latencies common to TCP.
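To make the FEC idea concrete, here is a minimal Python sketch of the simplest scheme: one XOR parity packet sent alongside each small group of media packets, so the receiver can rebuild any single lost packet without waiting for a resend. This is the basic principle behind RTP’s generic FEC payload format (RFC 5109), greatly simplified; production WebRTC stacks use more elaborate schemes, and the equal-length payloads and three-packet group here are assumptions of the sketch.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length payloads."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal-length payloads")
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(group: List[bytes]) -> bytes:
    """Sender: one XOR parity packet protecting a group of media packets."""
    return reduce(xor_bytes, group)


def recover(received: List[Optional[bytes]], parity: bytes) -> List[Optional[bytes]]:
    """Receiver: rebuild a single lost packet locally, with no retransmission."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) != 1:
        return list(received)  # nothing lost, or more losses than one parity can fix
    present = [p for p in received if p is not None]
    repaired = list(received)
    # XOR of the parity with every surviving packet yields the missing one.
    repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


group = [b"frame-A1", b"frame-B2", b"frame-C3"]
parity = make_parity(group)
arrived = [group[0], None, group[2]]  # packet 1 dropped in transit
print(recover(arrived, parity))
```

The trade-off is overhead: one parity packet per three media packets adds about a third more bandwidth in exchange for surviving one loss per group.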

All the solutions playing important roles in the emergence of RTIS have devised ways to scale WebRTC, in some cases to deliver ultra-low latency streaming in simultaneous synchronization to a million or more users. WebRTC’s dominance as the RTIS workhorse stems in part from native support in all the major browsers, including Chrome, Edge, Firefox, Safari and Opera, which eliminates the need for client plug-ins.

Adding momentum to WebRTC adoption are two complementary protocols, the WebRTC-HTTP Ingestion Protocol (WHIP) and the WebRTC-HTTP Egress Protocol (WHEP), which are meant to standardize and simplify the mass scaling of WebRTC.

Although not yet formally finalized, the WHIP and WHEP specifications have matured to a level of stability that has unleashed widespread adoption. Some RTIS providers have made them the default ingress/egress modes. In some cases, the default setting covers only WHIP, leaving it to developers to decide how to handle egress, a cluttered cloud segment boiling with cost-reducing options.

On the origination side, WHIP defines how to convey, via an HTTP POST, the Session Description Protocol (SDP) messaging that describes and sets up sessions, allowing content streamed over WebRTC from individual devices to be ingested into a topology of media servers that act as relays to client receivers. This exploits the automated setup capabilities of WebRTC-enabled browsers. On the distribution end, WHEP defines the SDP messaging that sets up connections between media servers and recipient clients, although providers can instead use proprietary automated cloud connections that make WHEP unnecessary.
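For readers wiring up ingest, the WHIP exchange can be sketched with nothing more than Python’s standard `urllib.request`. The sketch follows the WHIP specification as we understand it: the publisher POSTs its SDP offer with `Content-Type: application/sdp`, optionally authenticating with a bearer token, and ends the session with an HTTP DELETE to the session URL the server returned. The endpoint URL, token and truncated SDP body are placeholders, the helper names are hypothetical, and the requests are only built, not sent. WHEP follows a comparable HTTP exchange on the playback side.

```python
import urllib.request
from typing import Dict, Optional


def _auth_headers(bearer_token: Optional[str]) -> Dict[str, str]:
    """WHIP endpoints may require an HTTP bearer token."""
    return {"Authorization": f"Bearer {bearer_token}"} if bearer_token else {}


def build_whip_offer(endpoint: str, sdp_offer: str,
                     bearer_token: Optional[str] = None) -> urllib.request.Request:
    """Build (without sending) the POST that carries the publisher's SDP offer.

    A successful response is expected to be 201 Created, carrying the
    server's SDP answer in the body and a Location header naming the session.
    """
    headers = {"Content-Type": "application/sdp", **_auth_headers(bearer_token)}
    return urllib.request.Request(endpoint, data=sdp_offer.encode("utf-8"),
                                  headers=headers, method="POST")


def build_whip_teardown(session_url: str,
                        bearer_token: Optional[str] = None) -> urllib.request.Request:
    """Build the DELETE sent to the session URL to end the ingest session."""
    return urllib.request.Request(session_url, headers=_auth_headers(bearer_token),
                                  method="DELETE")


offer = build_whip_offer("https://media.example.com/whip/live", "v=0\r\n", "demo-token")
# urllib normalizes header names, so Content-Type is stored as "Content-type".
print(offer.get_method(), offer.get_header("Content-type"))
```

Passing the built request to `urllib.request.urlopen` would send it; a real publisher would then apply the SDP answer from the response body to its peer connection.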

Projected RTIS Growth Tops 40% CAGR

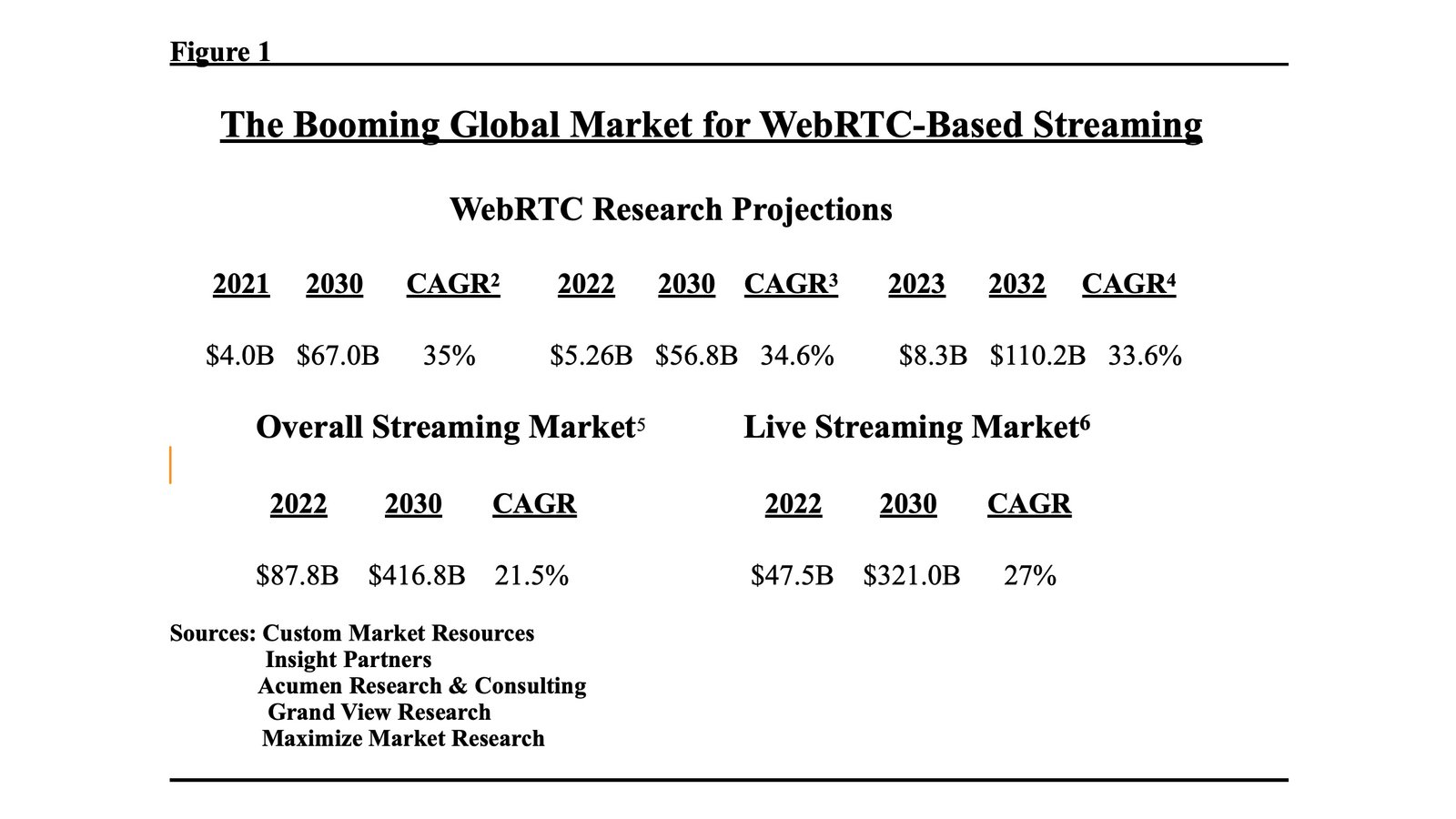

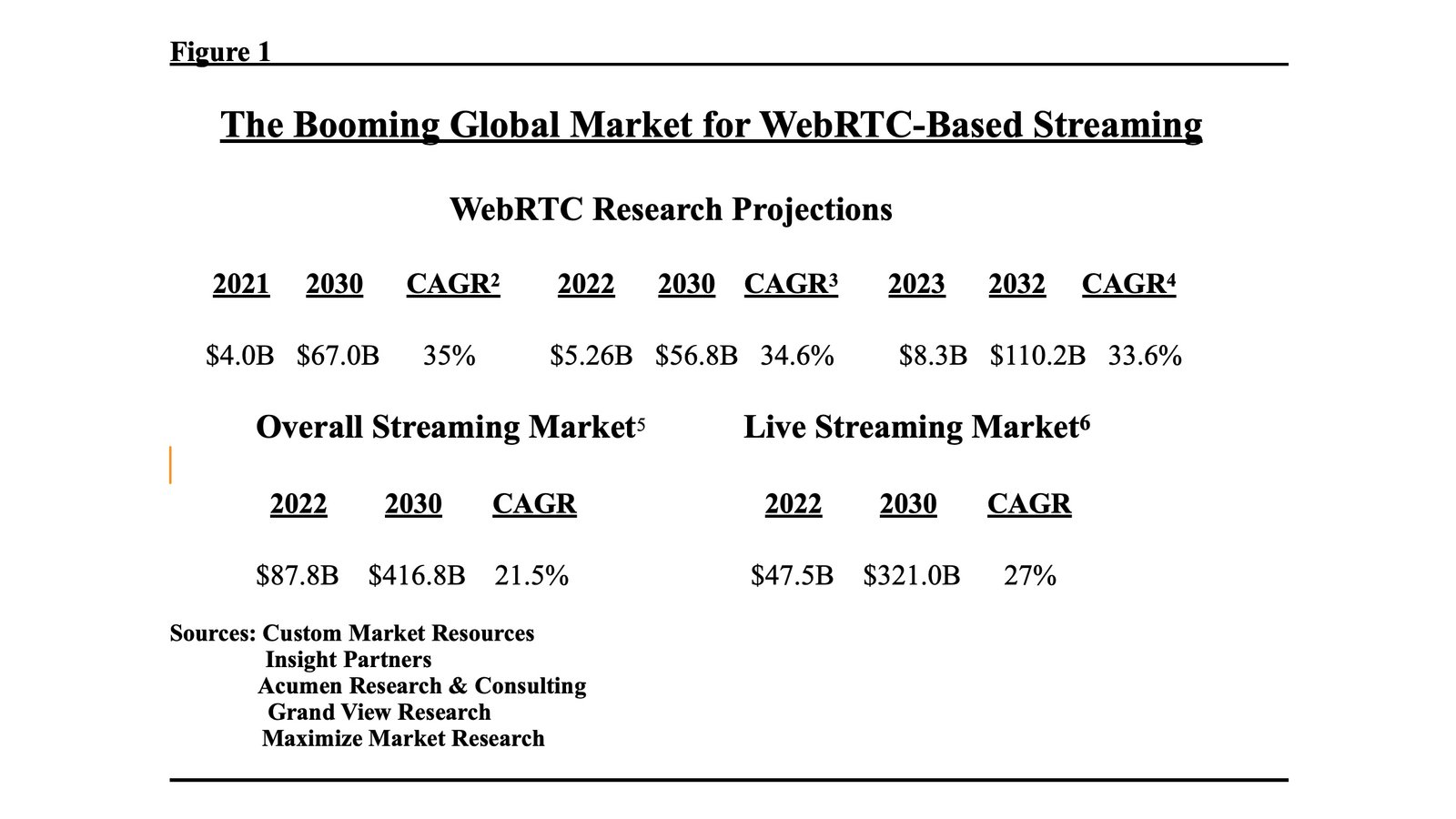

Multiple research studies confirm that the market for WebRTC-based solutions is growing far faster than both the overall streaming market and its live streaming segment, with CAGRs in the 34%-35% range compared with growth rates in the low to mid 20s. Figure 1 summarizes some of these findings.

Figure 1: The Booming Global Market for WebRTC-Based Streaming

Sources:

- Custom Market Resources

- Insight Partners

- Acumen Research & Consulting

- Grand View Research

- Maximize Market Research

Even at this very early stage of WebRTC adoption, these growth rates mean spending on WebRTC streaming is projected to rise from about 6% of the total streaming market in 2022 to 11% by 2030, and from 13% of the live streaming market to 20% over the same period. The reasons become clear from calculations of what sticking with HTTP-based streaming is already costing the world and what it would cost going forward.
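The share projections can be cross-checked with a little arithmetic. If WebRTC’s share of a market rises from s0 to s1 over n years, WebRTC spending must outgrow that market by a factor of (s1/s0)^(1/n) each year. The Python sketch below applies that identity to the figures above (2022 to 2030, so n = 8) and, assuming a 34% WebRTC CAGR taken from the low end of the cited range, backs out the market growth rate each share shift implies. The function names are illustrative.

```python
def relative_growth(share_start: float, share_end: float, years: int) -> float:
    """Annual factor by which WebRTC spending must outgrow the reference market."""
    return (share_end / share_start) ** (1 / years)


def implied_market_cagr(webrtc_cagr: float, share_start: float,
                        share_end: float, years: int) -> float:
    """Market CAGR consistent with the given WebRTC CAGR and share shift."""
    return (1 + webrtc_cagr) / relative_growth(share_start, share_end, years) - 1


for market, s0, s1 in [("total streaming", 0.06, 0.11), ("live streaming", 0.13, 0.20)]:
    rate = implied_market_cagr(0.34, s0, s1, years=8)
    print(f"{market}: implied CAGR {rate:.1%}")
```

This yields roughly 24% for the total streaming market and 27% for live streaming, broadly in line with the 20-something growth rates cited for those markets.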

Based on an assessment that each 100ms of video latency costs 1% of streaming revenue, Rethink Technology Research has shared calculations with us showing that latency alone in live-streamed coverage of sports and other events will cost the M&E industry $2.8 billion in lost revenue annually between 2022 and 2026. That’s in line with another analysis, sponsored by Red5, which found that losses from excessive latency in one-way live video streaming applications totaled $1.9 billion as of 2021.
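The rule of thumb behind the Rethink estimate is easy to express as a linear model. The Python sketch below is not Rethink’s methodology, which we haven’t seen; it simply applies “1% of revenue per 100ms” to a hypothetical $100 million revenue base, capping the loss at 100%, to show how quickly multi-second HTTP latencies add up compared with sub-500ms delivery.

```python
def revenue_at_risk(revenue: float, latency_ms: float,
                    pct_per_100ms: float = 1.0) -> float:
    """Revenue exposed to latency under a linear 'X% per 100 ms' rule of thumb."""
    share = (pct_per_100ms / 100) * (latency_ms / 100)
    return revenue * min(share, 1.0)  # a loss can't exceed total revenue


REVENUE = 100e6  # hypothetical annual live-event revenue in dollars
for label, latency in [("HTTP, 2 s", 2_000), ("HTTP, 5 s", 5_000), ("RTIS, 400 ms", 400)]:
    print(f"{label}: ${revenue_at_risk(REVENUE, latency):,.0f} at risk")
```

Under these assumptions a 2-second HTTP stream puts 20% of revenue at risk versus 4% at 400ms, a gap that grows linearly with every additional 100ms.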

The Red5-sponsored study also estimated what would be lost if the emerging next-generation applications in consumer, enterprise and other market segments that can’t be implemented without the interactive component of real-time streaming had not been activated as of 2025. Revenues at risk that year would total $75.9 billion, the study found.

The Multi-Market Explosion in RTIS Use Cases

Validation of what today’s RTIS infrastructures mean to internet ecosystem evolution is coming from all directions. At one head-turning end of the use-case spectrum we find at least one instance where U.S. defense contractors are using RTIS technology in mission-critical situations.

And growing numbers of police, fire and other emergency-response agencies are relying on RTIS technology to synchronize and transport multiple drone, terrestrial and underwater surveillance feeds in real time for AI-assisted analysis at operations centers. For example, real-time crime centers, where police analyze video surveillance, AI face-recognition intelligence, license plate reads and other feeds delivered in real time to LED walls, are springing up around the U.S.

At the other end of the RTIS use-case spectrum, innovations applying real-time interactive video streaming in consumer applications are moving the needle across the board. Transformations in consumer experience are impacting engagements with live-streamed sports and esports, social media, multiplayer gaming, music events, virtual casino gambling, sports betting and micro-betting, live auctions, in-stream ads, online shopping, and other forms of ecommerce. Video-infused remote participation in business, education, and healthcare is now the norm.

Critically, RTIS is also freeing the consumer and commercial extended reality (XR) sectors to pursue networked user experiences across multiple entertainment and enterprise market segments. In recognition of the practical implications of recent developments such as Apple’s launch of the Vision Pro head-mounted display (HMD), the term “spatial computing,” long used in technical circles, is supplanting the more fanciful, hype-abused metaverse terminology as the best label for the merging of XR, AI, laser-sensor and other advanced technologies in market-moving applications.

Moving RTIS to the 5G Edge

Beyond what’s already been accomplished with RTIS platforms that can scale sub-500ms interactivity to vast numbers of simultaneous users at any distance, the most basic requirement for a fully realized next-generation streaming infrastructure comes down to squeezing more milliseconds out of streaming and cloud-function latencies. In other words, we need to get as close as possible to the point where the speed of light is the primary limit on latency reduction.
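How close is “as close as possible”? A back-of-the-envelope Python sketch helps: light in optical fiber travels at roughly two-thirds of its vacuum speed, or about 5 microseconds per kilometer, which sets a hard floor under any network path. The refractive index and route distances below are approximations, and real routes add detours, queuing and processing on top.

```python
C_VACUUM_KM_PER_S = 299_792.458
FIBER_INDEX = 1.47  # assumed refractive index, typical of single-mode fiber


def fiber_delay_ms(distance_km: float, round_trip: bool = False) -> float:
    """Lower bound on propagation delay over fiber along a straight path."""
    speed_km_per_s = C_VACUUM_KM_PER_S / FIBER_INDEX  # roughly 204,000 km/s
    one_way = distance_km / speed_km_per_s * 1000
    return 2 * one_way if round_trip else one_way


# Approximate great-circle distances; real fiber routes are longer.
for route, km in [("metro (50 km)", 50), ("New York-London", 5_570),
                  ("half of Earth's circumference", 20_000)]:
    print(f"{route}: {fiber_delay_ms(km):.1f} ms one way, "
          f"{fiber_delay_ms(km, round_trip=True):.1f} ms round trip")
```

Even at half the planet’s circumference the physical floor is just under 100ms one way, which leaves room for sub-500ms interaction at any distance, while 50ms targets depend on keeping processing close to users.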

A major step in this direction is shaping up to be the colocation of edge-based 5G control centers with access points to leading cloud computing platforms. Versions of the concept, which AWS pioneered in 2019 with the launch of its Wavelength initiative, have also been announced by Microsoft Azure and Google Cloud.

While 5G, as widely touted by the cellular industry, contributes significantly to latency reduction in mobile interactions with the internet, the truth is these contributions are confined to radio access network (RAN) operations, where the much longer delays incurred with 4G and earlier RAN technology have been cut to 1ms or 4-10ms, depending on the type of 5G architecture in play. But extremely low end-to-end latencies are unattainable over any type of 5G network when traffic traversing cell sites, metro and regional aggregation centers and the internet to get to and from cloud processing centers incurs delays of anywhere from hundreds of milliseconds to multiple seconds.

Those delays can be eliminated when traffic flows directly between cell sites and cloud computing centers via multi-access edge computing (MEC) platforms like Wavelength, which so far appears to be the most widely used MEC, with multiple MNO partners worldwide, including Verizon, Bell Canada, Japan’s KDDI, SK Telecom in South Korea, and Vodafone in Europe. In the case of Wavelength, these instantiations of cloud compute and storage services connect with AWS infrastructure running in 77 Availability Zones across 24 AWS Regions worldwide.
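The argument here is essentially a latency budget: shaving the RAN down to a few milliseconds does little if the rest of the path consumes hundreds. The Python sketch below sums one-way segment delays for a path that traverses aggregation centers and the internet and for one that goes directly to a MEC zone, then checks each against a 50ms target. Every number in it is an illustrative assumption, not a measurement of any operator’s network, and `budget_report` is a hypothetical helper.

```python
from typing import Dict

# Illustrative one-way delays in ms. These values are assumptions chosen to fall
# within the ranges discussed above, not measurements of any particular network.
VIA_AGGREGATION_AND_INTERNET: Dict[str, float] = {
    "5G RAN": 10,
    "cell site to metro/regional aggregation": 120,
    "internet transit to cloud region": 150,
    "cloud processing": 30,
}
VIA_EDGE_ZONE: Dict[str, float] = {
    "5G RAN": 10,
    "cell site to colocated MEC zone": 5,
    "cloud processing": 30,
}


def budget_report(path: Dict[str, float], target_ms: float) -> str:
    """Sum a path's segment delays and compare the total with a target."""
    total = sum(path.values())
    worst = max(path, key=path.get)  # segment contributing the most delay
    verdict = "meets" if total <= target_ms else "misses"
    return f"{total:.0f} ms total ({verdict} {target_ms:.0f} ms; largest: {worst})"


for name, path in [("aggregation + internet", VIA_AGGREGATION_AND_INTERNET),
                   ("MEC zone", VIA_EDGE_ZONE)]:
    print(f"{name}: {budget_report(path, target_ms=50)}")
```

With these assumed values, the RAN accounts for only a small fraction of the conventional path’s total, which is why cutting out the traversal matters more than further RAN gains.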

But once the packet stream starts flowing beyond the AWS cloud, then what? All the traditional impediments associated with HTTP-based streaming will kick in unless RTIS infrastructure is integrated with AWS within the Wavelength Zone. So far, we’re aware of just one RTIS provider whose technology has been pre-integrated with a MEC provider, namely, Red5, which was certified as a pre-integrated Wavelength partner in 2022.

But we’re also aware that Phenix, another RTIS provider, is now touting use of Wavelength with its platform, although it doesn’t appear to have achieved pre-integrated status as yet. The guess here is that such integrations between multiple RTIS and MEC suppliers will become commonplace as 5G becomes ever more vital to next-gen compute-intensive applications requiring latencies in the 50ms range.

Integrating RTIS with Intelligence at the Extreme Edge

But even with universal RTIS sector adoption, these 5G edge initiatives won’t be enough to support full realization of the next-gen internet’s potential. That will require even deeper penetration of miniaturized compute and streaming components that can be globally orchestrated with distributed cloud assets to support any piece of magic developers might have in store.

For example, extreme edge processing could instantaneously execute complex AI tasks on a per-user basis on cloud-processed content streaming over real-time infrastructure. Or, where spatial computing is involved, processing at extreme edge points could offload rendering processes like ray or path tracing that might be too much for lightweight HMD processors to handle.

Whether or not XR is involved, interfacing computing with real-time streaming at extreme edge locations will have major implications for multiplayer game development, taking game playing to new levels by enabling simultaneous, delay-free participation in fast-action competition among players scattered across the globe. By leveraging edge computing to instantly transcode user input, developers will be able to include any webcam flows that may be used to enhance social interactions as games are played. Instant interpretations of user behavior will tap core and distributed cloud databases to deliver recommendations, intelligence to improve players’ performances, and other information pertinent to immediate personalized responses to players’ behavior.

In manufacturing, colocating endpoints for real-time video communications with intelligent processing will add new efficiencies by supporting interactions between workers on the factory floor and centralized management. In industrial IoT applications, sophisticated processing at the extreme edge, combined with webcams, could be coordinated with sensor input to produce much better results in automated maintenance operations.

Examples of progress toward extreme edge computing abound. In one notable case, AWS has implemented some of the most important advances supporting latency-sensitive use cases through its portfolio of Snow-branded products. These devices can be optimized for computing or storage to implement functions in close proximity to points of data generation.

At the chip-processing level, Nvidia, the leading supplier of AI-optimized GPUs, has introduced the Jetson AGX Orin Industrial module as an extreme-edge AI and robotics platform hardened for deployment in harsh environments. On the game-playing front, Nvidia has demonstrated how developers can leverage its GeForce NOW Developer Platform SDK with RTIS to run remote playtest sessions with multiple users, tracking not only game play but also visual streams of the gamers themselves.

Qualcomm, too, is breaking new ground with its Edge-Anywhere initiative. In the 5G realm, Qualcomm is introducing miniaturized compute form factors to enable edge servers and device processors to work in concert to support the most latency-sensitive use cases. More broadly, the company is producing systems-on-chips (SoCs) designed to facilitate direct connectivity between RTIS infrastructure and processing for spatial computing, surveillance, and other use cases.

In another high-profile example of what’s afoot, IBM’s container-based Edge Application Manager platform has been designed to enable data, AI and IoT workloads to be deployed where data is collected in order to provide analysis and insight that can be delivered to customers in real time. Big Blue has partnered with Acromove, Inc., a provider of “Edge Cloud in-a-Box” solutions, to serve clients who want to deploy, in IBM’s words, “highly mobile geo-elastic edge data centers in any environment within minutes.”

The developments discussed here are just a hint of what’s in store as RTIS and computer processing merge at extreme edge locations. Any analysis of how the many RTIS providers compare must take into account not only their capabilities as enabled in current iterations of their infrastructures but also how well positioned they are for migration to the requirements of next-generation edge computing.

The Essential Benefits of Cross-Cloud Distributed RTIS Architecture

A good place to start in that analysis is with an aspect of RTIS design that bears on both current performance and the ability to operate effectively in the future scenarios described above. While there’s definitely a need for turnkey Platform-as-a-Service (PaaS) options that let users with generic sets of requirements take advantage of RTIS infrastructure on a click-and-go basis, there’s a greater need for choice in where and how solutions are deployed.

Service and applications providers require distribution and creative flexibility that can only be attained with a decentralized, hosting-agnostic approach to deploying RTIS in whatever cloud environments work for them. There are many reasons even a fairly routine use of RTIS infrastructure is better served with access to cross-platform flexibility.

For example, running a solution on one of the big-three cloud compute systems might be all that’s needed now, but other cloud compute platforms competing aggressively with the leaders for market share are coming up with innovations that lower costs and add functionalities that could merit shifting traffic into their domains. Or perhaps there’s a need to use another cloud infrastructure provider to reach market sectors through latency-reducing edge proximity that’s not available from the RTIS provider’s primary cloud provider.
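Why hosting-agnostic deployment matters can be shown with a toy placement decision. In the Python sketch below, which uses made-up providers, regions, round-trip times and prices, a deployment that can run on any cloud simply picks the cheapest node that meets its latency bound; a platform locked to one provider would be limited to that provider’s entries. `CandidateNode` and `pick_node` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class CandidateNode:
    provider: str       # hypothetical provider label
    region: str
    rtt_ms: float       # measured round trip to the target audience
    monthly_cost: float


def pick_node(nodes: List[CandidateNode], max_rtt_ms: float) -> Optional[CandidateNode]:
    """Cheapest node that meets the latency bound, whichever cloud hosts it."""
    eligible = [n for n in nodes if n.rtt_ms <= max_rtt_ms]
    return min(eligible, key=lambda n: (n.monthly_cost, n.rtt_ms), default=None)


catalog = [
    CandidateNode("cloud-a", "us-east", rtt_ms=38, monthly_cost=1_400),
    CandidateNode("cloud-b", "us-east", rtt_ms=22, monthly_cost=1_100),
    CandidateNode("cloud-c", "edge-metro", rtt_ms=9, monthly_cost=1_900),
]
print(pick_node(catalog, max_rtt_ms=40).provider)  # cost wins within the bound
print(pick_node(catalog, max_rtt_ms=15).provider)  # only edge proximity qualifies
```

If the tighter latency bound applied and only `cloud-a` were available, `pick_node` would return `None`: the locked-in platform simply couldn’t serve that audience.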

A real-time streaming platform that’s available only in a locked-in PaaS cloud environment fails to satisfy these requirements. An equally significant consideration is developers’ need for the flexibility to customize their apps beyond the pre-built templates on offer. An API-only approach eliminates access to the programmable components that enable deep integration of essential use-case functionalities.

For example, a PaaS API designed exclusively for apps that bring people together in cyberspace is likely ill-suited to building apps like those discussed above related to surveillance and emergency responses. Or, to cite another example, PaaS APIs may be unsuited for custom use cases involving AI technology.

Even when human interactions are central to a developer’s app, there may be a need to create more complex functionalities through customizations not supported by a PaaS. For example, some use cases require modifications to the server software or even the creation of Unity SDKs devoted strictly to a unique application.

This requires engineering support, which may be missing with PaaS providers that focus on pre-built applications. A PaaS designed for customers who want a ready-to-use approach to RTIS must not only offer the flexibility essential to customization but also have in-house professionals available to help when needed.

Success-Based Pricing

Cost can be a big barrier to implementing new streaming infrastructure, but it doesn’t have to be, especially when there are important use cases with significant revenue-generating potential, including the potential to deliver competitive advantages. Costs can get out of hand when PaaS providers employ usage-based pricing models that pass their own high cloud platform costs along to customers by charging for every participant minute or audience viewing minute. Perceptions that RTIS costs too much are driving high churn rates on some platforms and dampening enthusiasm for new engagements with those providers.

RTIS infrastructure users should not be penalized for success, nor should they be burdened by hidden costs stemming from those portions of a commonly used infrastructure they don’t use. A much better model allows the customer to pay for the infrastructure they need based on how many nodes are employed on a given cloud platform to accommodate their strategies.

Once they’ve paid for the infrastructure, they should not have to pay for how much it is used. That allows them to build revenue-generating models that don’t pass excessive costs on to their customers, ensuring they can exploit the advantages of offering their customers a much more appealing cost-benefit option than their competitors. With success, the RTIS application provider’s ROI goes up, not down.
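The difference between the two pricing models can be shown with a simple Python sketch. All rates, node prices and per-node capacities below are hypothetical, and real contracts add other terms; the point is only how each bill responds when engagement grows.

```python
import math


def usage_based_monthly(participant_minutes: float, rate_per_minute: float) -> float:
    """Bill that rises with every participant-minute served."""
    return participant_minutes * rate_per_minute


def node_based_monthly(peak_concurrent: int, capacity_per_node: int,
                       price_per_node: float) -> float:
    """Bill set by the nodes provisioned for peak load, not by total usage."""
    nodes = math.ceil(peak_concurrent / capacity_per_node)
    return nodes * price_per_node


# Hypothetical figures: 5,000 peak concurrent users, 1,000 users per node.
for minutes in (1_000_000, 5_000_000, 10_000_000):
    usage = usage_based_monthly(minutes, rate_per_minute=0.002)
    nodes = node_based_monthly(5_000, capacity_per_node=1_000, price_per_node=1_500)
    print(f"{minutes:>10,} min: usage-based ${usage:>9,.0f} | node-based ${nodes:,.0f}")
```

As engagement grows tenfold at the same peak concurrency, the usage-based bill grows tenfold while the node-based bill stays flat; the node-based bill rises only when peak demand calls for more capacity.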

Support for Dynamic Ad Insertion & Other Requirements

RTIS infrastructure must also take into account the need to support dynamic server-side ad insertion (SSAI). Dynamic advertising with the ability to match ads to targeted audience profiles is fast becoming the dominant mode of monetization in consumer applications of streamed video.

After a long, hard slog, SSAI in the HTTP streaming world has reached a level of performance that, while far from the reliability of traditional TV advertising, has proven good enough to draw the higher revenues that ad targeting generates from advertisers eager for better results. An RTIS platform that can’t satisfy this demand will have a limited future in the mass consumer market.
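For context, here is roughly how SSAI works in the HTTP world referred to above: the server stitches ad segments into the media playlist it hands the player, marking each splice with an `#EXT-X-DISCONTINUITY` tag so the player knows timestamps and encoding may change at that point. The Python sketch below builds such an HLS playlist for a single ad pod. Segment names and durations are hypothetical, ad decisioning and tracking are omitted, and it says nothing about how any RTIS platform implements ad insertion over WebRTC.

```python
import math
from typing import List, Tuple

Segment = Tuple[str, float]  # (URI, duration in seconds)


def stitch_playlist(content: List[Segment], ads: List[Segment], break_after: int) -> str:
    """Splice an ad pod into an HLS VOD media playlist after content[break_after]."""
    spliced = content[:break_after + 1] + ads + content[break_after + 1:]
    # Flag the switch into the ad pod and the return to content, since the
    # ad segments come from a different encode and timeline.
    boundaries = {break_after + 1, break_after + 1 + len(ads)} if ads else set()
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 permits decimal EXTINF durations
        f"#EXT-X-TARGETDURATION:{math.ceil(max(d for _, d in spliced))}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for index, (uri, duration) in enumerate(spliced):
        if index in boundaries:
            lines.append("#EXT-X-DISCONTINUITY")
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


content = [("content_0.ts", 6.0), ("content_1.ts", 6.0), ("content_2.ts", 6.0)]
ad_pod = [("ad_0.ts", 5.0), ("ad_1.ts", 5.0)]
print(stitch_playlist(content, ad_pod, break_after=0))
```

In a targeted deployment, the ad pod passed in would differ per viewer profile, which is what makes the stitching server-side and dynamic.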

There are many other considerations that will impact app developers’ and service providers’ choices of RTIS providers. The most important things they’ll be looking for beyond what we’ve discussed so far include the extent of support for:

- Overall performance reliability, with fail-safe redundancy and fundamental scalability capabilities.

- Multiple video resolutions and codecs.

- Transcoding that adjusts bitrates to available bandwidth through the use of adaptive bitrate (ABR) profiles.

- Integration with conventional CDNs.

- Multiple SDKs, not just for HTML5, iOS and Android development environments but for purely mobile environments and the Unreal and Unity developer environments as well.

- Plug-ins for cross-platform frameworks like Xamarin, React Native and Cordova.

- Ingestion and streaming of protocols other than WebRTC in real time, including RTMP, RTSP, SRT, and MPEG-TS unicast and multicast.

- Automatic fallback to HLS packaging in instances where devices lack support for real-time options.
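Two items on this list, ABR transcoding and HLS fallback, amount to a per-viewer delivery decision. The Python sketch below shows one simple way to express it: pick the highest rung of an ABR ladder that fits within a safety margin of measured bandwidth, and deliver over WebRTC when the device supports it, falling back to HLS otherwise. The ladder, the 80% headroom factor and the function names are assumptions for illustration; in WebRTC deployments, adaptation is often handled with simulcast layers rather than a separate ladder selection like this.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Rendition:
    label: str
    bitrate_kbps: int


# Hypothetical ABR ladder; real ladders are tuned per codec and content.
LADDER: List[Rendition] = [
    Rendition("1080p", 5_000),
    Rendition("720p", 3_000),
    Rendition("480p", 1_200),
    Rendition("360p", 700),
]


def choose_rendition(bandwidth_kbps: float, ladder: List[Rendition] = LADDER,
                     headroom: float = 0.8) -> Rendition:
    """Highest-bitrate rendition that fits within a safety margin of bandwidth."""
    budget = bandwidth_kbps * headroom
    fitting = [r for r in ladder if r.bitrate_kbps <= budget]
    if not fitting:
        return min(ladder, key=lambda r: r.bitrate_kbps)  # best effort: lowest rung
    return max(fitting, key=lambda r: r.bitrate_kbps)


def choose_delivery(supports_webrtc: bool, bandwidth_kbps: float) -> Tuple[str, str]:
    """Real-time delivery where the device supports it, otherwise fall back to HLS."""
    transport = "webrtc" if supports_webrtc else "hls"
    return transport, choose_rendition(bandwidth_kbps).label


print(choose_delivery(True, 4_000))  # 80% of 4,000 kbps leaves room for 720p
print(choose_delivery(False, 500))   # below the lowest rung: HLS at 360p
```

Keeping the rendition choice independent of the transport choice means the same ladder can serve both real-time and fallback viewers.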

Concluding Observations

Thanks to the emergence of multiple massively scalable real-time interactive streaming platforms, we now have the makings of a next-generation multi-provider streaming grid that will allow ever-wider adoption of use cases heralding a new era in internet engagement. An explosion of commercial activity leveraging these platforms makes clear that the transformation of everything from streamed entertainment to the vast realm of activity touched by spatial computing can move forward on a WebRTC-based foundation.

But as things stand now, there are severe limits to how far the world can go in that direction, and those limits have nothing to do with WebRTC or with finding some other solution. What’s needed is the strategic vision and market capitalization that will move the existing crop of RTIS platforms, and any others entering the fray, toward meeting all the requirements essential to bringing the new era to life.

Widely achieved sub-500ms end-to-end latencies in globe-spanning interactive engagements running on these platforms are just a beginning. Ultimately, RTIS will have to be coupled with distributed cloud computing in pervasive deployments of network components at or near residential and business premises if we are to get rid of the latencies in streaming and cloud-orchestrated intelligence that impede the seamless flow of activity between real and virtual spaces.

As RTIS buildouts move in this direction, it’s essential that other impediments common to many platforms be lifted as well. Unfettered innovation requires that developers be free to implement their applications unbound by cross-cloud restrictions and the exorbitant costs of usage-based pricing in PaaS environments. Support for dynamic advertising must be a given attribute across multiple RTIS platforms if service providers are to go beyond niche uses.